Quality score in 2017: Should you care?

You’ve got to hand it to the folks at Google — the idea of quality score is pretty brilliant. Unlike most search engines born in the ’90s, Google realized that the success of paid search advertising was directly tied to the quality and relevance of their paid search ads.


After all, if someone searches for “best dog food for rottweilers,” and the first result they see on the SERP is a handful of text ads selling Toyota hatchbacks, they aren’t likely to be wowed by your search engine. If people think your search engine is lousy, they won’t use it… which means no one will pay to advertise on your search engine, either.

But, if you incentivize advertisers to create ads that are relevant to a user’s search, you can maintain the quality of your SERP and still make money from paid search advertising.

The solution? Quality score.

Now, if you’ve been doing paid search advertising for a while, quality score probably isn’t a new concept. Paid search platforms like Google look at your click-through rate, ad relevance and landing page experience and assign your ads a quality score. As your quality score goes up, your average position tends to go up and/or your average cost per click tends to go down.
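For intuition, here is the simplified auction model Google long used to explain quality score publicly: Ad Rank = max CPC bid × quality score, and you pay just enough to beat the Ad Rank of the advertiser below you. Google's live auction weighs more signals than this, so treat the sketch below, with its hypothetical bids, as an illustration of the direction of the effect, not as a pricing calculator.

```python
def ad_rank(max_cpc: float, quality_score: int) -> float:
    """Simplified Ad Rank: maximum CPC bid times quality score (1-10)."""
    return max_cpc * quality_score


def actual_cpc(next_ad_rank: float, quality_score: int) -> float:
    """Simplified price paid: the Ad Rank of the advertiser below you,
    divided by your quality score, plus one cent."""
    return round(next_ad_rank / quality_score + 0.01, 2)


# Hypothetical auction: you bid less than your rival but have a better quality score.
yours = ad_rank(max_cpc=2.00, quality_score=8)   # 16.0
rival = ad_rank(max_cpc=3.00, quality_score=4)   # 12.0
print(yours > rival)           # → True: you take the higher position
print(actual_cpc(rival, 8))    # → 1.51, well under your $2.00 bid
```

In this toy model, a higher quality score both lifts your position and lowers the price of each click, which is the relationship described above.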

Seems simple enough, right? The better your quality score, the better your advertising results will be.

But is it really that simple? Sure, quality score is great for Google, but should optimizing quality score be a key part of your paid search advertising strategy? To answer that question, let’s take a look at the data.

Quality score and cost per conversion

When it comes to quality score and cost per click, the evidence is pretty clear: improving your quality score decreases your cost per click. Since your cost per conversion is essentially your cost per click divided by your conversion rate, you’d expect that improving your quality score would also improve your cost per conversion.
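To make that arithmetic concrete, here is a minimal sketch of the cost-per-conversion formula with hypothetical numbers. It shows why a cheaper click is not automatically a cheaper conversion: if the conversion rate falls along with the CPC, cost per conversion can still go up.

```python
def cost_per_conversion(cost_per_click: float, conversion_rate: float) -> float:
    """Cost per conversion = cost per click / conversion rate.

    conversion_rate is a fraction, e.g. 0.05 for 5 percent.
    """
    if conversion_rate <= 0:
        raise ValueError("conversion_rate must be positive")
    return cost_per_click / conversion_rate


# Hypothetical keyword before and after a quality score improvement.
before = cost_per_conversion(2.00, 0.05)            # about $40.00
after_same_rate = cost_per_conversion(1.60, 0.05)   # about $32.00: CPC fell 20 percent
after_worse_rate = cost_per_conversion(1.60, 0.03)  # about $53.33: cheaper clicks, worse traffic
print(f"${before:.2f} -> ${after_same_rate:.2f} or ${after_worse_rate:.2f}")
```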

Sadly, that’s not how it actually works out.

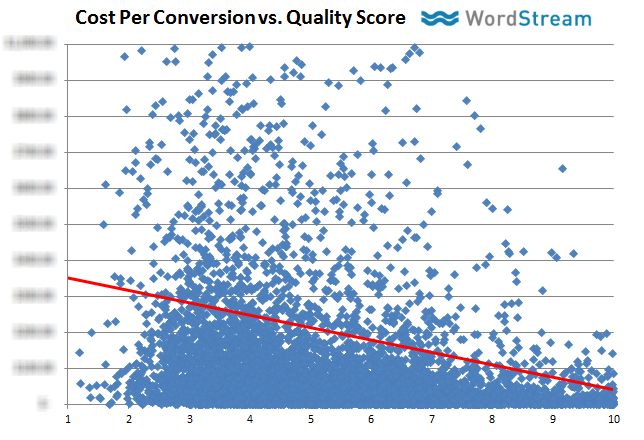

Now, you might be thinking, “But Jake, I know I’ve seen research somewhere showing that a higher quality score is associated with a lower cost per conversion.” And it’s true. Odds are, you’ve run into an article discussing the results of this study by Wordstream or this study by Portent.

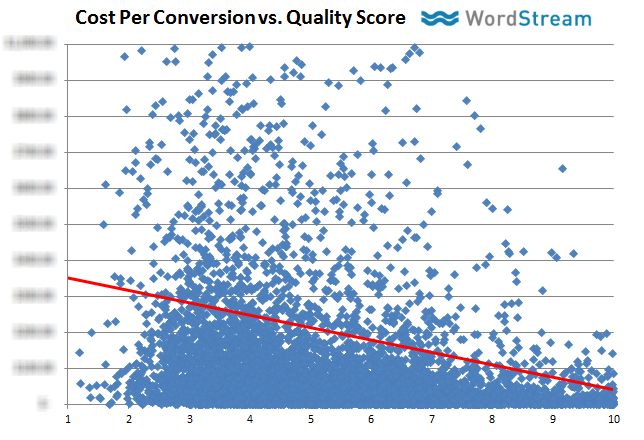

In both of these studies, cost per conversion typically dropped by around 13 to 16 percent for every point of increase in quality score.

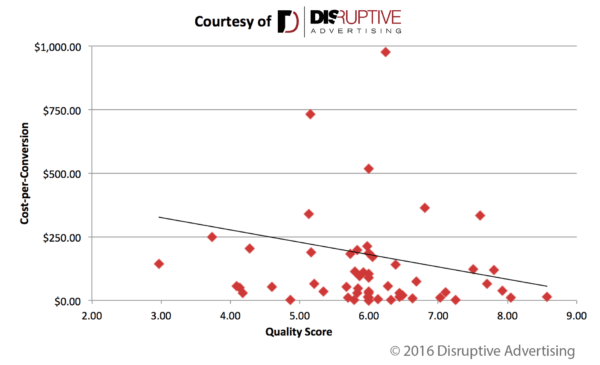

At Disruptive Advertising (my employer), we’ve audited thousands of AdWords accounts, so we decided to use our database to replicate Wordstream’s study. Not surprisingly, we got about the same results: Every point of increase in quality score resulted in a 13 percent decrease in cost per conversion.

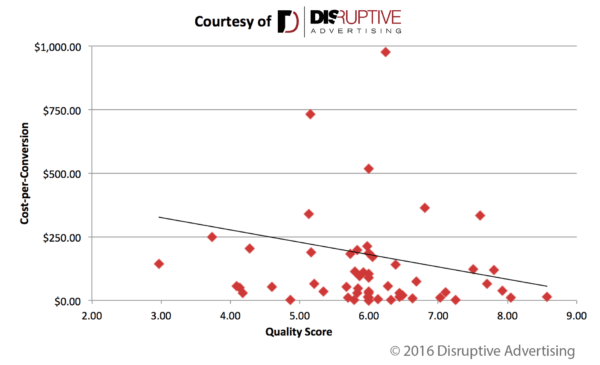

A graph with thousands of data points (like the one above) is a bit hard to interpret, so below I’ve plotted a small, representative subset of our data to make the pattern easier to see:

Given the consistency of this data, you’re probably wondering how I can say that improving quality score does not reliably decrease cost per conversion. I mean, look at the graphs! There’s clearly a connection between quality score and cost per conversion!

Or is there?

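Unfortunately, while these graphs look compelling, the trendline has an R² of just 0.012. In non-statistical speak, that means quality score accounts for only about 1 percent of the variation in cost per conversion across our data; the other 99 percent comes from something else. The average 13 to 16 percent drop is real, but it tells you almost nothing about what will actually happen to a given keyword’s cost per conversion when you raise its quality score.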

Would you put a lot of time and effort into a marketing tactic that explains only 1 percent of your results? Neither would I.
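If you want to check an R² yourself, here is a minimal sketch for a single-variable least-squares trendline (the kind a spreadsheet adds to a scatter plot), written from scratch so it needs no libraries. The sample numbers are made up; the point is that a line can slope clearly in one direction while still explaining little of the scatter around it.

```python
def r_squared(xs, ys):
    """Coefficient of determination for the least-squares line y = a + b*x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or ss_tot == 0:
        raise ValueError("need variation in both x and y")
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # Residual sum of squares: how far each point sits from the fitted line.
    ss_res = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))
    return 1 - ss_res / ss_tot


# Made-up data: the trendline slopes upward, yet explains only a quarter of the variation.
print(r_squared([1, 2, 3], [1, 3, 2]))  # → 0.25
```

By the same measure, an R² of 0.012 means the trendline accounts for about 1.2 percent of the variation.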

Why quality score is a poor predictor

There are a lot of reasons quality score is an unreliable predictor of cost per conversion. However, I believe the biggest reason is also the simplest: Quality score is Google’s metric, not yours.

Quality score matters to Google because it helps Google make money, not because it helps you make money. No one sees your ad on the SERP and thinks, “My, what a fine quality score they must have! Anyone with a quality score like that deserves my business.”

While Google cares about providing a relevant experience to their users, they don’t really care about whether or not you’re sending potential customers to your page or getting conversions at an affordable price. You got your click and they got their cash, so Google’s happy.

You, however, still need to drive conversions at an affordable price.

To do that, though, you can’t rely on the metrics Google cares about. Sure, your ad might make Google happy, but if that ad isn’t driving the right people to the right page, you could be wasting a ton of money — even on a keyword with a quality score of 10!

Case in point: over the course of our AdWords audits, we’ve discovered that the average AdWords account wastes 76 percent of its budget on keywords and search terms that never convert.
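Here is a minimal sketch of how you might measure wasted ad spend from a search terms export, assuming "wasted" means spend on search terms with zero conversions over your chosen date range. The `SearchTermRow` type and the sample rows are hypothetical, not an AdWords export format.

```python
from dataclasses import dataclass


@dataclass
class SearchTermRow:
    search_term: str
    cost: float
    conversions: float


def wasted_spend_share(rows):
    """Fraction of total cost spent on search terms that produced no conversions."""
    total = sum(r.cost for r in rows)
    if total == 0:
        return 0.0
    wasted = sum(r.cost for r in rows if r.conversions == 0)
    return wasted / total


# Hypothetical search terms for a dog food advertiser.
rows = [
    SearchTermRow("best dog food for rottweilers", 300.0, 6),
    SearchTermRow("free dog food samples", 450.0, 0),
    SearchTermRow("toyota hatchback", 250.0, 0),
]
print(f"{wasted_spend_share(rows):.0%}")  # → 70%
```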

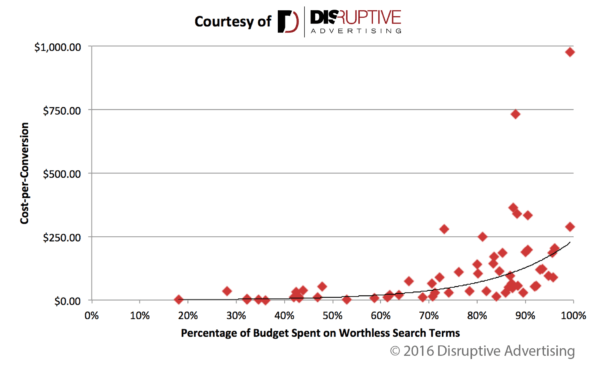

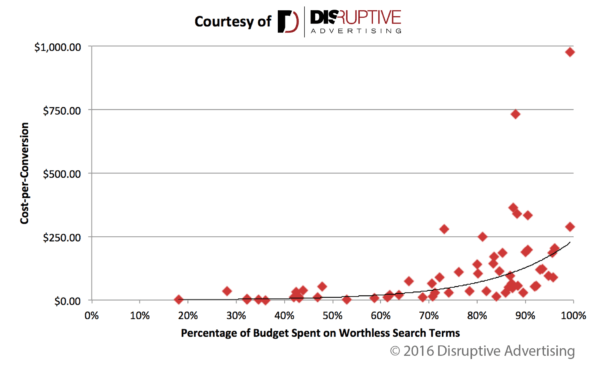

Here’s how that wasted ad spend affects your cost per conversion (using the same data subset as before):

As it turns out, this data is even scarier than the quality score data. Each 10 percent increase in wasted ad spend increases your cost per conversion by 44 to 72 percent. And while this relationship isn’t perfect, it has an R² of 0.597, which means wasted ad spend accounts for about 60 percent of the variation in cost per conversion.

That’s a lot more compelling than 1 percent.

In fact, we’ve frequently helped clients significantly reduce their cost per conversion by reducing their wasted ad spend. For example, here’s what happened to one client as we reduced their wasted ad spend from 91 percent to 68 percent:

If you think about it, it makes sense that core account factors like wasted ad spend would have a much bigger impact on your cost per conversion than an external metric like quality score. After all, as we pointed out earlier, you can have a great quality score and still be driving people who will never buy to your site.
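One way to see why waste hits so hard is a deliberately simple model, which is an assumption rather than anything measured: hold the budget fixed and assume the non-wasted spend converts at a constant cost per conversion. Then account-wide cost per conversion is that constant divided by the share of spend that isn't wasted, so every cut in waste shrinks the denominator's drag on results.

```python
def blended_cpa(useful_cpa: float, wasted_share: float) -> float:
    """Account-wide cost per conversion when only (1 - wasted_share) of spend can convert.

    Toy model: assumes the non-wasted spend converts at a fixed cost per
    conversion (useful_cpa) and the total budget stays the same.
    """
    if not 0 <= wasted_share < 1:
        raise ValueError("wasted_share must be in [0, 1)")
    return useful_cpa / (1 - wasted_share)


# Hypothetical $20 cost per conversion on the spend that does convert.
for share in (0.91, 0.68, 0.50):
    print(f"{share:.0%} wasted -> ${blended_cpa(20.0, share):,.2f} per conversion")
```

This is a toy model, not a measured result; real accounts also shift conversion rates and CPCs as waste is cut.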

How to use quality score

All that being said, I still believe that quality score is a valuable metric to track and optimize. Quality score affects your cost per click and average position, which can do wonders for your account — provided that you aren’t hemorrhaging money in other areas.

So if you’re not wasting a ton of money on irrelevant clicks, and you feel confident in the quality of your traffic and landing page, quality score can be a great way to improve your paid search account.

First, open your AdWords account, go to the Keywords tab, and ensure that you’ve added Quality score as a column:

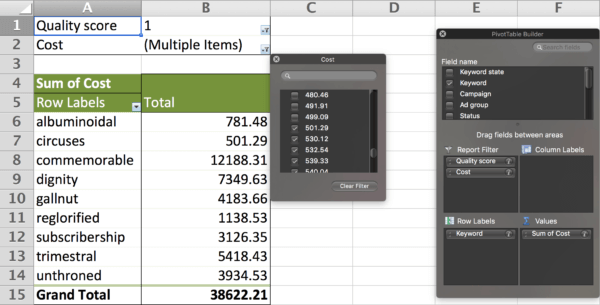

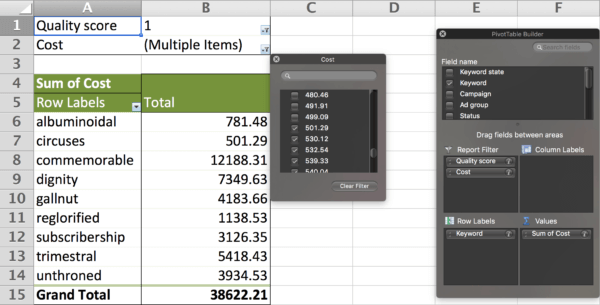

Next, pick a meaningful date range (I’m always partial to the last 6 to 12 weeks) and export your results as a spreadsheet. Open the spreadsheet in Excel and create a pivot table:

The following settings will allow you to see how much you are spending on each level of quality score:

Looking at the data above, 12 percent of this client’s budget is being spent on keywords with a quality score of 1. If we assume that those keywords are driving relevant traffic (maybe they’re bidding on the competition’s branded terms?), bumping their quality score from 1 to 2 could save the client thousands!
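If you’d rather script this pivot than build it in Excel, here is a minimal Python sketch. It assumes the export has already been reduced to (keyword, quality score, cost) rows, with `None` standing in for keywords that have no quality score yet; the sample rows are hypothetical.

```python
from collections import defaultdict


def spend_by_quality_score(rows):
    """Sum cost for each quality score, like a pivot table with Quality score
    as rows and Sum of Cost as values. Returns {score: (cost, share_of_total)}."""
    totals = defaultdict(float)
    for _keyword, quality_score, cost in rows:
        if quality_score is None:  # no score assigned yet; leave it out
            continue
        totals[quality_score] += cost
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {}
    return {score: (cost, cost / grand_total) for score, cost in sorted(totals.items())}


rows = [
    ("best dog food for rottweilers", 7, 1200.0),
    ("grain free dog food", 5, 500.0),
    ("competitor brand dog food", 1, 300.0),
    ("dog food subscription", None, 50.0),
]
for score, (cost, share) in spend_by_quality_score(rows).items():
    print(f"QS {score}: ${cost:,.2f} ({share:.0%})")
```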

Alternatively, if you want to see exactly how much you’re spending on specific keywords with a given quality score, you can set your pivot table up like this:

In this case, I’ve included a filter for cost that allows me to see keywords with a quality score of 1 that the client has spent more than $500 on. This gives me nine high-priority keywords (representing the majority of ad spend on keywords with this quality score) to focus on, which should be a fairly workable number.
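The same filter can be sketched in Python: keep keywords at a given quality score with spend above a threshold, sort the biggest spenders first, and check what share of that quality score’s spend they cover. The function names, the $500 default, and the sample rows are hypothetical illustrations of the pivot-table filter described above.

```python
def high_priority_keywords(rows, quality_score=1, min_cost=500.0):
    """Keywords at the given quality score with cost above min_cost, biggest spend first."""
    matches = [
        (keyword, cost)
        for keyword, score, cost in rows
        if score == quality_score and cost > min_cost
    ]
    return sorted(matches, key=lambda item: item[1], reverse=True)


def coverage(rows, picked, quality_score=1):
    """Share of all spend at this quality score that the picked keywords represent."""
    level_total = sum(cost for _, score, cost in rows if score == quality_score)
    return sum(cost for _, cost in picked) / level_total if level_total else 0.0


rows = [
    ("competitor brand dog food", 1, 1800.0),
    ("competitor brand kibble", 1, 650.0),
    ("competitor brand treats", 1, 120.0),
    ("best dog food for rottweilers", 7, 2400.0),
]
picked = high_priority_keywords(rows)
print(picked)
print(f"{coverage(rows, picked):.0%} of quality score 1 spend")
```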

check out here

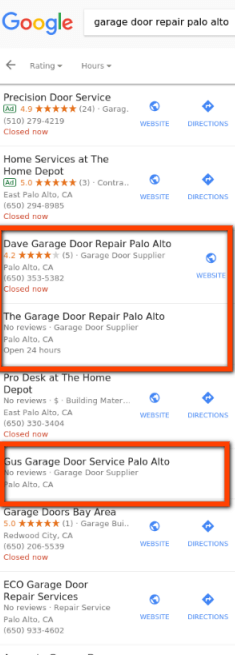

After my column about Home Service Ads came out last week, I got a message from Google with some great news. They told me two things:

After my column about Home Service Ads came out last week, I got a message from Google with some great news. They told me two things:

You’ve got to hand it to the folks at Google — the idea of quality score is pretty brilliant. Unlike most search engines born in the ’90s, Google realized that the success of paid search advertising was directly tied to the quality and relevance of their paid search ads.

You’ve got to hand it to the folks at Google — the idea of quality score is pretty brilliant. Unlike most search engines born in the ’90s, Google realized that the success of paid search advertising was directly tied to the quality and relevance of their paid search ads.