Regions And Cities Working Together For A Better Future

Yesterday I attended a meeting at the Committee of the Regions in Brussels. The event was organised by Age Platform Europe, and the auditorium was packed with enthusiastic local and regional government officials representing around 300 municipalities, towns and cities from across Europe.

We learned about imaginative projects designed to match the needs of ageing communities to the mutual benefit of local businesses and solution providers.

Considering the diverse geographic, political and cultural backgrounds of the delegates, it was heartening that the general consensus seemed to be this: as we live longer, our aspirations should expand, and we will need new outlets for our skills and creativity if we are to stay healthy, active and engaged members of society long into what is currently termed ‘retirement’.

Yet here we are, two decades into the twenty-first century, with popular media still referring to the ‘silver tsunami’, a doom-laden metaphor coined in the late twentieth century to describe population ageing.

Maybe it’s time to press reset and redefine the common perception that ties age to fixed stages of life (study, work, retirement). This conventional wisdom, termed ‘chronologism’ by sociologist Michael Young, is rooted in history and has long passed its sell-by date.

Adopting a more agile approach to the way we manage our lives in what author Klaus Schwab has dubbed the ‘Era of Digital Transformation’ could have a significant impact on our health and wellbeing across the life course. Moreover, ‘Agile Ageing’ is a trillion-dollar business opportunity that cuts across health, social care and housing, and it is ripe for development.

Neighbourhoods of the Future

Earlier this year the Agile Ageing Alliance (AAA) published a white paper, Neighbourhoods of the Future - Better Homes for Older Adults, which concludes that a new breed of Cognitive Home could have a transformative effect on how we age. Facilitated by innovations in technology, business and service models, our homes could empower us to enjoy more meaningful, creative and independent lives well into old age. That would radically transform our relationship with public services, create new opportunities for learning and social engagement, and reduce the financial burden on the State and citizens.

Smart business

Our homes are getting smarter through basic innovations such as smart meters and smart speakers, but this is just the beginning. Digital technologies, digital infrastructure and data production are already revolutionising our lives in so many ways and it won’t be too long before they are integral to our homes, enriching our lives and the lives of our friends and loved ones; facilitating a greater degree of interaction and communication, personalised support and preventative care, and enabling health and human services to be delivered remotely.

In truth, there’s a whole new phase of life up for grabs which nobody has catered for. Now, with the convergence of potentially game-changing assistive technologies, we have a golden opportunity to rethink the outlook for ageing populations and provide a much-needed boost to the Silver Economy.

A 21st Century Cooperative

The big question is: who will own our homes and, of course, the data we generate? For entrepreneurs and startups this is a fantastic business opportunity. But to challenge the status quo, I believe we need to rethink the development model. Why not involve public funders, SMEs, academic researchers and investors in a more equitable partnership with corporates, the stakeholders best qualified to create sustainable brands?

By investing in a cooperative for the 21st Century, in a spirit of open innovation and collaboration, corporate mentors will be able to tap into a fresh stream of passionate, innovative and potentially disruptive talent, while SMEs gain access to a global ecosystem, assets, expertise and confidence.

If we don’t act, business as usual will see the more aggressive US tech giants establishing proprietary platforms and hoovering up promising incumbents, which will make it extremely difficult for European businesses to prosper thereafter. The land grab has started, with the likes of Amazon, Google and Apple sizing up healthcare and the connected home, and voice-activated products like Amazon Echo and Google Home making the early running.

We have been warned.

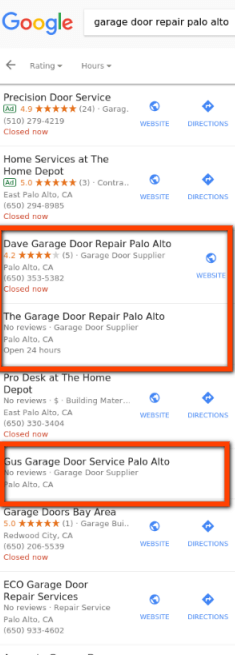

After my column about Home Service Ads came out last week, I got a message from Google with some great news. They told me two things:


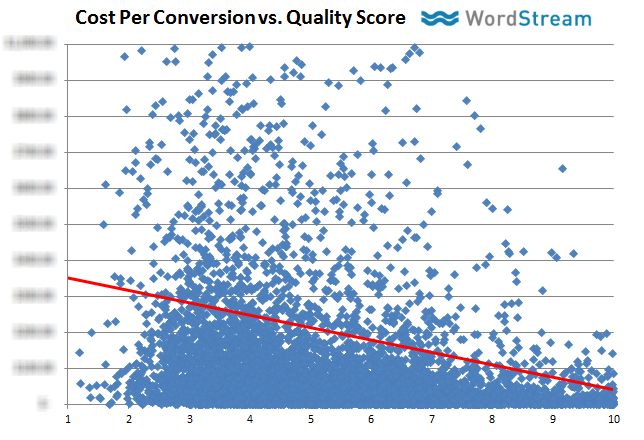

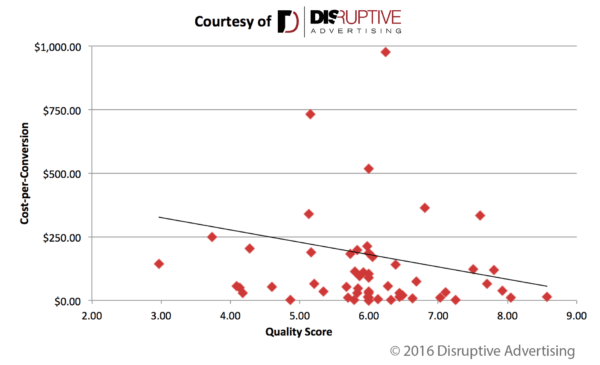

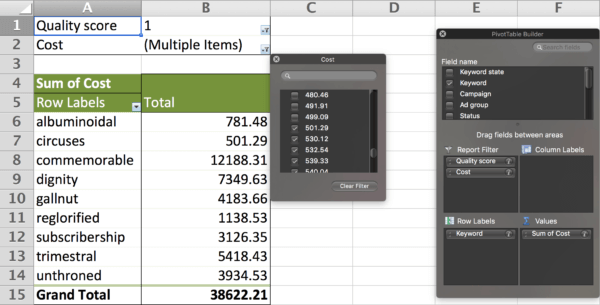

You’ve got to hand it to the folks at Google — the idea of quality score is pretty brilliant. Unlike most search engines born in the ’90s, Google realized that the success of paid search advertising was directly tied to the quality and relevance of their paid search ads.
